Best Practices for Securing Data Used to Train & Operate AI Systems

On May 22, 2025, the NSA released a Cybersecurity Information Sheet (CSI) on AI data security. The CSI introduces the risks that emerge as companies integrate AI into software development, business processes, and workflows.

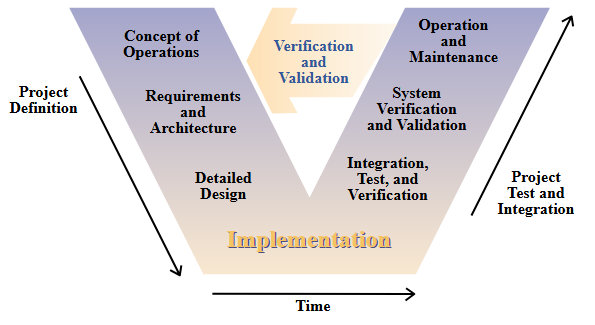

AI System Lifecycle

The guidance opens with a description of the AI system lifecycle. The six stages it identifies map well onto the conventional software development lifecycle, and they align with the V-Model and with formal software lifecycle standards such as IEC 62304.

- Plan and Design => Concept Design

- Collect and Process Data => Development

- Build and Use Model => Integration

- Verify and Validate => Testing

- Deploy and Use => Deployment and Release

- Operate and Monitor => Support

Given the parallels, most organizations will find integrating AI development with established software practices and procedures to be straightforward and incremental.

AI System Risks

The core of the advisory focuses on the risks associated with AI data security. The risks identified fall into three broad categories.

- Data Supply Chain: Ensuring the validity and integrity of data.

- Data Poisoning: Preventing malicious manipulation of data.

- Data Drift: Detecting changes in the underlying statistical properties of input data.

Data of Unknown Provenance

Organizations developing safety-critical systems will be familiar with managing risks related to SOUP (Software of Unknown Provenance). Integrating AI functionality adds the origins and integrity of data (DOUP?) to the risk profile. Data sources need to be tracked and validated. Data utilized in the application must be protected against manipulation.

Protecting the Well

The scale of data used in AI systems makes them susceptible to an array of injection attacks. The intentional insertion of inaccurate, biased, misleading, or outright malicious information is a serious risk for systems that update and learn iteratively. When 51% of Internet traffic is automated, consider the potential exposure of a reinforcement learning system that learns from clicked results.

Everything We Know has an Expiration Date

Data drift refers to the changes in the underlying statistical properties of the input data to an operational AI system. Data sets commonly correspond to a moment in time, and the world doesn’t stand still (Reality Drift?). Depending on the domain, the data may be suitable for a time capsule or a compost pile. Even facts have a half-life. Data sets rarely age like a fine wine.
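
One way to make drift measurable is to compare a reference sample of a feature (captured at training time) against a recent sample from production. The sketch below uses the two-sample Kolmogorov-Smirnov statistic, which is the largest gap between the two empirical distributions. This is only an illustration, not something the CSI prescribes: the function names and the `DRIFT_THRESHOLD` value are assumptions, and a real threshold would need to be tuned per feature and domain.

```python
from bisect import bisect_right

# Hypothetical cut-off for illustration; tune per feature and domain.
DRIFT_THRESHOLD = 0.3


def ks_statistic(reference, current):
    """Return the two-sample Kolmogorov-Smirnov statistic.

    This is the maximum absolute difference between the empirical CDFs
    of the two samples: 0.0 means identical, 1.0 means no overlap.
    """
    ref = sorted(reference)
    cur = sorted(current)
    if not ref or not cur:
        raise ValueError("both samples must be non-empty")

    max_gap = 0.0
    # The empirical CDFs only change at observed values, so checking
    # every observed value is enough to find the maximum gap.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def has_drifted(reference, current, threshold=DRIFT_THRESHOLD):
    """Flag a feature whose distribution moved more than the threshold."""
    return ks_statistic(reference, current) > threshold
```

Running a check like this on a schedule for each input feature turns "the world doesn't stand still" into an alert that can trigger review or retraining.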

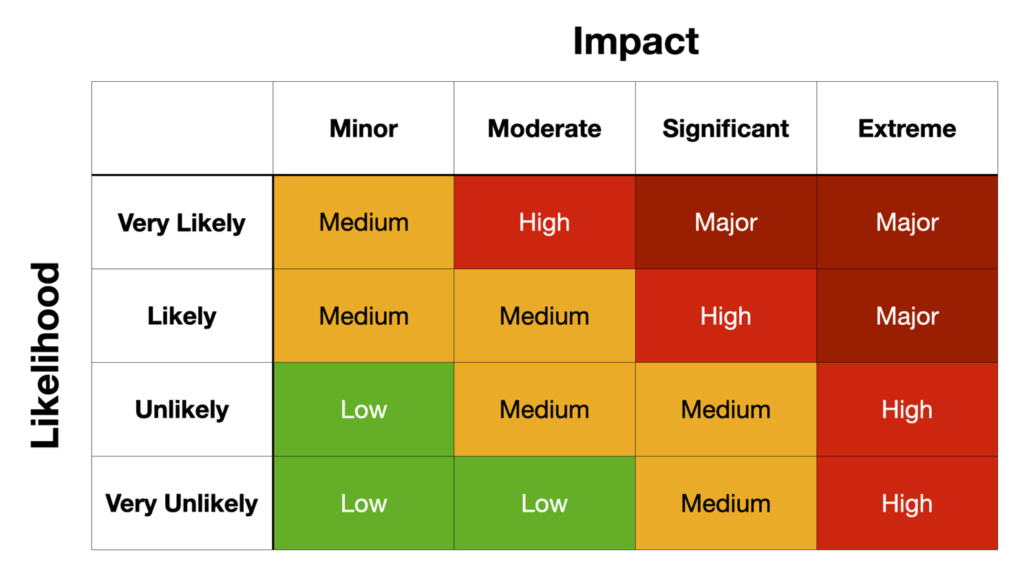

AI Risk Management

Risk levels for AI are domain dependent. Suggesting a connection to “someone you may know” is on a completely different level than generating a medical diagnosis. Fortunately, existing risk management frameworks incorporate processes and tools for identifying risks, assessing their impact, and estimating their likelihood of occurrence. Determine the acceptable threshold and apply mitigations appropriate for the problem domain.
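
As a rough illustration of that process, the sketch below scores each risk as likelihood times impact and lists the ones that exceed an acceptable threshold. The 1 to 5 scales, the `ACCEPTABLE_RISK` value, and the names `Risk` and `needs_mitigation` are all assumptions made for this example; your own risk management procedure defines the real scales and threshold.

```python
from dataclasses import dataclass

# Hypothetical threshold on a 1..25 scale; set this per problem domain.
ACCEPTABLE_RISK = 8


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self):
        # Reject values outside the assumed 1..5 scales.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5")

    @property
    def score(self):
        """Simple risk score: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def needs_mitigation(risks, threshold=ACCEPTABLE_RISK):
    """Return risks scoring above the threshold, highest score first."""
    above = [risk for risk in risks if risk.score > threshold]
    return sorted(above, key=lambda risk: risk.score, reverse=True)
```

A medical diagnosis feature would likely carry a much lower threshold than a connection suggestion, which is exactly the domain dependence described above.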

For organizations new to risk management in software applications, Aron Lange published a great overview of ISO/IEC 27005 on LinkedIn.

Recommendations

- Read the Guidance

- Apply Best Practices

  - Map AI data security to the software development lifecycle

  - Integrate data with configuration management

  - Apply encryption at rest and in transit

  - Integrate hashing to verify data integrity

- Risk Management

  - Add AI considerations to the risk management procedure

  - Establish an acceptable risk threshold and apply mitigations
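
The hashing recommendation is easy to prototype. The sketch below records a SHA-256 digest for every file in a dataset directory and later reports any file that was added, removed, or changed. It is a minimal example using only the Python standard library; the function names and the directory layout are assumptions, and the manifest itself has to be protected (for example, kept under configuration management or signed) for the check to mean anything.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir):
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    root = Path(data_dir)
    return {
        path.relative_to(root).as_posix(): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def verify_manifest(data_dir, manifest):
    """Return the sorted names of files that were added, removed, or modified."""
    current = build_manifest(data_dir)
    all_names = manifest.keys() | current.keys()
    return sorted(
        name for name in all_names if manifest.get(name) != current.get(name)
    )
```

Build the manifest when a dataset version is approved, store it alongside the version tag, and run `verify_manifest` before every training or evaluation run. An empty list means the data matches what was approved.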